In my previous post I wrote about the generalities of Geometric Algebra. We saw that three-dimensional space generates a geometric algebra of dimension \(2^3 = 8 = 1 + 3 + 3 + 1\), composed of four linear subspaces: scalars, vectors, bivectors and pseudo-scalars.

The elements of these subspaces can be used to describe the geometry of Euclidean space. Vectors are associated with directions in space, bivectors with rotations, and the pseudo-scalar with volumes.

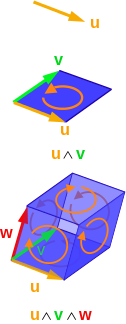

The product of two vectors is composed of a scalar part, their scalar product, and a bivector part. The bivector part correspond to the oriented area of the parallelogram constructed with the two vectors like is shown in the figure below:

An illustration of a vector, a bivector and a volume, equivalent to a pseudo scalar (image from Wikipedia)

GA computations in Euclidean space

If you want to use GA to make computations, you need to "represent" the elements of each subspace. To my surprise, I discovered that a generic multivector of three-dimensional space can be represented by a 2x2 complex matrix.

A quick count shows that the dimensions match: 2x2 complex matrices have four complex entries, so they form a real vector space of dimension 8, just like the GA of three-dimensional space.

The interest of this representation is that the GA product corresponds to the ordinary matrix product.

What is the actual matrix representation?

Well, the easy one is the unit scalar. We can easily guess that it corresponds to the identity matrix

\[ I = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} \]

but things get slightly more interesting for vectors.

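If we call \(\hat{\mathbf{x}}\), \(\hat{\mathbf{y}}\), \(\hat{\mathbf{z}}\) the basis vectors, their matrix counterparts should satisfy the following equalities

\[ \hat{\mathbf{x}}^2 = \hat{\mathbf{y}}^2 = \hat{\mathbf{z}}^2 = I, \qquad \hat{\mathbf{x}}\hat{\mathbf{y}} = -\hat{\mathbf{y}}\hat{\mathbf{x}}, \quad \hat{\mathbf{y}}\hat{\mathbf{z}} = -\hat{\mathbf{z}}\hat{\mathbf{y}}, \quad \hat{\mathbf{z}}\hat{\mathbf{x}} = -\hat{\mathbf{x}}\hat{\mathbf{z}} \]

It can be verified that the properties above are satisfied by the following Hermitian matrices, so that we can write

\[ \hat{\mathbf{x}} = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \qquad \hat{\mathbf{y}} = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \qquad \hat{\mathbf{z}} = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix} \]

Once we have the vectors, we can derive the matrices for the bivectors by taking their products, to obtain

\[ \hat{\mathbf{x}}\hat{\mathbf{y}} = \begin{pmatrix} i & 0 \\ 0 & -i \end{pmatrix}, \qquad \hat{\mathbf{y}}\hat{\mathbf{z}} = \begin{pmatrix} 0 & i \\ i & 0 \end{pmatrix}, \qquad \hat{\mathbf{z}}\hat{\mathbf{x}} = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix} \]

Finally, the pseudo-scalar is obtained as the product of the three basis vectors

\[ \hat{\mathbf{x}}\hat{\mathbf{y}}\hat{\mathbf{z}} = \begin{pmatrix} i & 0 \\ 0 & i \end{pmatrix} \]

Since this latter matrix is equal to \(i I\), we can simply identify the imaginary unit with the unit pseudo-scalar \(\mathbf{i}\).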
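These identities are easy to check numerically. The snippet below is a minimal sketch that assumes NumPy and takes the three Pauli matrices, in their standard form, as the matrix counterparts of the basis vectors; the names `I2`, `X`, `Y`, `Z` are only illustrative.

```python
import numpy as np

# Matrix counterparts of the unit scalar and of the basis vectors
# (assumed here to be the standard Pauli matrices).
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# Each basis vector squares to the unit scalar.
squares_ok = all(np.allclose(m @ m, I2) for m in (X, Y, Z))

# Distinct basis vectors anticommute.
anticommute_ok = all(
    np.allclose(a @ b, -(b @ a)) for a, b in ((X, Y), (Y, Z), (Z, X))
)

# The unit bivectors are products of two distinct basis vectors
# and each of them squares to -1.
bivectors = {"xy": X @ Y, "yz": Y @ Z, "zx": Z @ X}
bivector_squares_ok = all(np.allclose(b @ b, -I2) for b in bivectors.values())

# The pseudo-scalar x y z is represented by i times the identity.
pseudoscalar_ok = np.allclose(X @ Y @ Z, 1j * I2)

print(squares_ok, anticommute_ok, bivector_squares_ok, pseudoscalar_ok)
```

The check that every unit bivector squares to -1 is the property that later makes the exponential of a bivector behave like a complex exponential.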

So what?

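From the practical point of view, it means that you can represent a vector of components \((v_x, v_y, v_z)\) with the matrix

\[ \mathbf{v} = v_x \hat{\mathbf{x}} + v_y \hat{\mathbf{y}} + v_z \hat{\mathbf{z}} = \begin{pmatrix} v_z & v_x - i v_y \\ v_x + i v_y & -v_z \end{pmatrix} \]

Vector addition can be performed simply by taking the matrix sum.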
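As a small illustration, here is a sketch of the vector-to-matrix mapping in Python with NumPy. The helper name `vector_to_matrix` is hypothetical, and the layout assumes that the basis vectors are represented by the standard Pauli matrices.

```python
import numpy as np

def vector_to_matrix(v):
    """Return the 2x2 complex matrix representing the vector (vx, vy, vz).

    Assumes the basis vectors are represented by the standard Pauli matrices.
    """
    vx, vy, vz = v
    return np.array([[vz, vx - 1j * vy],
                     [vx + 1j * vy, -vz]], dtype=complex)

a = np.array([1.0, 2.0, 3.0])
b = np.array([-0.5, 0.0, 4.0])

A = vector_to_matrix(a)
B = vector_to_matrix(b)

# Adding the matrices is the same as adding the vectors first.
sum_ok = np.allclose(A + B, vector_to_matrix(a + b))

# The matrix of a vector is Hermitian and traceless.
hermitian_ok = np.allclose(A, A.conj().T)
traceless_ok = np.isclose(np.trace(A), 0)

# The square of a vector is the scalar |a|^2.
square_ok = np.allclose(A @ A, np.dot(a, a) * np.eye(2))

print(sum_ok, hermitian_ok, traceless_ok, square_ok)
```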

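The product of two vectors yields a scalar plus a bivector. Writing \(w_x, w_y, w_z\) for the components of a bivector along \(\hat{\mathbf{y}}\hat{\mathbf{z}}\), \(\hat{\mathbf{z}}\hat{\mathbf{x}}\) and \(\hat{\mathbf{x}}\hat{\mathbf{y}}\), its general matrix representation is

\[ w_x \, \hat{\mathbf{y}}\hat{\mathbf{z}} + w_y \, \hat{\mathbf{z}}\hat{\mathbf{x}} + w_z \, \hat{\mathbf{x}}\hat{\mathbf{y}} = \begin{pmatrix} i w_z & i w_x + w_y \\ i w_x - w_y & -i w_z \end{pmatrix} \]

These relations let us compute the matrix representation of any vector or bivector, but we also need to perform the opposite operation: given a complex matrix, extract its scalar, vector, bivector and pseudo-scalar (imaginary) parts.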

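This is actually quite easy to work out. For any complex matrix

\[ M = \begin{pmatrix} s & p \\ q & t \end{pmatrix} \]

the scalar part, real plus imaginary, is simply \(\frac{s + t}{2}\). The vector part is given by

\[ v_x = \frac{p + q}{2}, \qquad v_y = \frac{i \, (p - q)}{2}, \qquad v_z = \frac{s - t}{2} \]

where, just as for the scalar part, each component is in general complex: its real part is the vector component and its imaginary part is the corresponding bivector component.

With the relations above you can extract the scalar and vector parts of any given complex matrix.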
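The extraction can also be written with traces, which is convenient in code. The sketch below assumes NumPy and the standard Pauli matrices as basis vectors; `split_multivector` and `vector_to_matrix` are hypothetical helper names. It applies the extraction to the product of two vectors, which should give their scalar product as the scalar part and, under this convention, the components of their cross product as the bivector part.

```python
import numpy as np

# Assumed matrix counterparts of the basis vectors x, y, z (Pauli matrices).
SIGMA = (
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
)

def vector_to_matrix(v):
    """Matrix of the vector v = (vx, vy, vz)."""
    return sum(vk * sk for vk, sk in zip(v, SIGMA))

def split_multivector(m):
    """Split a 2x2 complex matrix into its four grades.

    Returns (scalar, vector, bivector, pseudoscalar), where the bivector
    components are ordered along (yz, zx, xy).
    """
    c0 = np.trace(m) / 2  # scalar + i * pseudo-scalar
    c = np.array([np.trace(m @ s) / 2 for s in SIGMA])  # vector + i * bivector
    return c0.real, c.real, c.imag, c0.imag

a = np.array([1.0, 2.0, 3.0])
b = np.array([-0.5, 0.0, 4.0])

scalar, vector, bivector, pseudo = split_multivector(
    vector_to_matrix(a) @ vector_to_matrix(b)
)

print(scalar, vector, bivector, pseudo)
```

Taking half the trace of the matrix times each Pauli matrix gives the same result as the element-wise formulas, but it avoids indexing individual entries.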

Rotations

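A rotation of a vector \(\mathbf{v}\) by an angle \(\theta\) can be expressed in GA using the relation

\[ \mathbf{v}' = e^{-\mathbf{i} \hat{\mathbf{u}} \theta / 2} \, \mathbf{v} \, e^{\mathbf{i} \hat{\mathbf{u}} \theta / 2} \]

where \(\hat{\mathbf{u}}\) is the unit vector oriented along the rotation axis.

So if you want to compute rotations, you can do it by using the exponential of a bivector. Since \((\mathbf{i} \hat{\mathbf{u}})^2 = -1\), this can be obtained very easily using the relation

\[ e^{\mathbf{i} \hat{\mathbf{u}} \theta} = \cos\theta + \mathbf{i} \hat{\mathbf{u}} \sin\theta \]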

The relation above does not let you compute the exponential of an arbitrary 2x2 complex matrix. It only works for matrices that represent bivectors. You can easily extend the formula to take a scalar component into account, but things get complicated for the vector part.

Actually, the exponential of a vector involves the hyperbolic sine and cosine, as given by the relation

\[ e^{\hat{\mathbf{u}} \theta} = \cosh\theta + \hat{\mathbf{u}} \sinh\theta \]

but the exponential of a combination of vectors and bivectors is more complicated (I confess I do not know the explicit formula).
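Both closed forms can be compared against a brute-force matrix exponential. The sketch below assumes NumPy and the Pauli-matrix representation of the basis vectors; `exp_series` is a hypothetical helper that sums a truncated Taylor series, which is adequate for small arguments but is not a general-purpose matrix exponential.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)

def vector_to_matrix(v):
    """Matrix of the vector (vx, vy, vz), assuming the Pauli representation."""
    vx, vy, vz = v
    return np.array([[vz, vx - 1j * vy],
                     [vx + 1j * vy, -vz]], dtype=complex)

def exp_series(m, terms=40):
    """Matrix exponential as a truncated Taylor series: sum of m^n / n!."""
    result = np.zeros_like(m)
    term = np.eye(m.shape[0], dtype=complex)
    for n in range(terms):
        result = result + term
        term = term @ m / (n + 1)
    return result

theta = 0.7
u = vector_to_matrix(np.array([1.0, 2.0, 2.0]) / 3.0)  # a unit vector

# Bivector case: (i u)^2 = -1, so the series gives cosine and sine.
bivector_closed = np.cos(theta) * I2 + 1j * np.sin(theta) * u
bivector_ok = np.allclose(exp_series(1j * theta * u), bivector_closed)

# Vector case: u^2 = +1, so the series gives the hyperbolic functions.
vector_closed = np.cosh(theta) * I2 + np.sinh(theta) * u
vector_ok = np.allclose(exp_series(theta * u), vector_closed)

print(bivector_ok, vector_ok)
```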

Anyway this is not really a problem since for rotations you only need to take the exponential of bivectors plus real numbers.

A silly example...

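Let us work through an example: a rotation by 30 degrees of the vector \(\mathbf{b} = 1/2 \, \hat{\mathbf{x}}+\hat{\mathbf{z}}\) around the z axis. In matrix representation we have

\[ \mathbf{b} = \begin{pmatrix} 1 & 1/2 \\ 1/2 & -1 \end{pmatrix} \]

The rotation by 30 degrees, that is \(\pi/6\), is given by

\[ \mathbf{b}' = e^{-\mathbf{i} \hat{\mathbf{z}} \pi / 12} \, \mathbf{b} \, e^{\mathbf{i} \hat{\mathbf{z}} \pi / 12} \]

which, in matrix representation, becomes

\[ \mathbf{b}' = \begin{pmatrix} e^{-i \pi / 12} & 0 \\ 0 & e^{i \pi / 12} \end{pmatrix} \begin{pmatrix} 1 & 1/2 \\ 1/2 & -1 \end{pmatrix} \begin{pmatrix} e^{i \pi / 12} & 0 \\ 0 & e^{-i \pi / 12} \end{pmatrix} \]

and by carrying out the products we obtain

\[ \mathbf{b}' = \begin{pmatrix} 1 & \frac{1}{2} e^{-i \pi / 6} \\ \frac{1}{2} e^{i \pi / 6} & -1 \end{pmatrix} = \frac{1}{2} \cos(\pi/6) \, \hat{\mathbf{x}} + \frac{1}{2} \sin(\pi/6) \, \hat{\mathbf{y}} + \hat{\mathbf{z}} \]

which is the expected result, since a rotation around the z axis transforms the x component into a mix of x and y with coefficients \(\cos(\pi/6)\) and \(\sin(\pi/6)\), and leaves the z component unchanged.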
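The same example can be reproduced numerically. This is only a sketch under stated assumptions: NumPy, the Pauli-matrix representation of the basis vectors, and the sign convention in which a positive angle is a counterclockwise rotation about the axis. The helpers `vector_to_matrix`, `matrix_to_vector`, `rotor` and `rotate` are hypothetical names.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)

def vector_to_matrix(v):
    """Matrix of the vector (vx, vy, vz), assuming the Pauli representation."""
    vx, vy, vz = v
    return np.array([[vz, vx - 1j * vy],
                     [vx + 1j * vy, -vz]], dtype=complex)

def matrix_to_vector(m):
    """Read (vx, vy, vz) back from the matrix of a pure vector."""
    return np.array([m[1, 0].real, m[1, 0].imag, m[0, 0].real])

def rotor(axis, angle):
    """exp(-i u angle/2) = cos(angle/2) - i u sin(angle/2) for the unit axis u."""
    axis = np.asarray(axis, dtype=float)
    u = vector_to_matrix(axis / np.linalg.norm(axis))
    return np.cos(angle / 2) * I2 - 1j * np.sin(angle / 2) * u

def rotate(v, axis, angle):
    """Rotate v about axis by angle using the sandwich product R v R^-1."""
    r = rotor(axis, angle)
    # u is Hermitian, so the inverse of r is its conjugate transpose.
    return matrix_to_vector(r @ vector_to_matrix(v) @ r.conj().T)

b = np.array([0.5, 0.0, 1.0])
b_rotated = rotate(b, axis=[0.0, 0.0, 1.0], angle=np.pi / 6)

# Expected components: (cos(pi/6) / 2, sin(pi/6) / 2, 1).
expected = np.array([np.cos(np.pi / 6) / 2, np.sin(np.pi / 6) / 2, 1.0])
print(np.allclose(b_rotated, expected))
```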

The plot twist

We said above that to generate rotations we only need bivectors and real numbers. It turns out that they constitute a subalgebra of the GA of Euclidean space. It is called the even subalgebra and it is isomorphic to the quaternions. This shows that quaternions and bivectors are essentially equivalent representations and that both are inherently related to rotations.
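The isomorphism can be made concrete by picking three unit bivectors and checking that they obey Hamilton's rules. The sketch below assumes NumPy and the Pauli-matrix representation; the particular assignment of `qi`, `qj`, `qk` to bivectors is one possible choice, not the only one.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# One possible identification of the quaternion units with unit bivectors.
qi = Z @ Y  # the bivector zy = -yz
qj = X @ Z  # the bivector xz = -zx
qk = Y @ X  # the bivector yx = -xy

# Hamilton's defining relations: i^2 = j^2 = k^2 = ijk = -1.
squares_ok = all(np.allclose(q @ q, -I2) for q in (qi, qj, qk))
triple_ok = np.allclose(qi @ qj @ qk, -I2)

# Cyclic products: ij = k, jk = i, ki = j.
cyclic_ok = (
    np.allclose(qi @ qj, qk)
    and np.allclose(qj @ qk, qi)
    and np.allclose(qk @ qi, qj)
)

print(squares_ok, triple_ok, cyclic_ok)
```

With this choice a quaternion \(a + b\,i + c\,j + d\,k\) corresponds to a scalar plus a bivector, which is exactly the kind of element used to generate rotations.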

To conclude this post, readers who already know quantum physics have probably noticed that the basis vectors in the matrix representation are actually the Pauli matrices. What is interesting is that in Geometric Algebra they appear naturally as a representation of the basis vectors, and they are not inherently related to quantum phenomena.

From the practical point of view, we have seen that 2x2 complex matrices can be used to compute geometrical operations in an elegant and logical way. The matrix representation is also reasonable for practical computations, even if it is somewhat redundant, since it always represents the most general multivector with 8 components.